In the era of big data, where vast amounts of information are generated every second, the ability to process and analyze data efficiently is crucial. RAM (Random Access Memory) plays a significant role in the performance of big data analytics and processing systems. Understanding how RAM impacts these processes can help organizations optimize their systems for better performance and faster insights.

- Understanding Big Data Analytics

What is Big Data Analytics?

- Definition: Big data analytics involves examining large and complex data sets to uncover hidden patterns, correlations, and other insights.

- Applications: It is used in various fields, including finance, healthcare, marketing, and more, to make data-driven decisions.

Challenges in Big Data Analytics

- Volume: The sheer volume of data can be overwhelming, requiring significant computational resources.

- Velocity: The speed at which data is generated and processed demands quick and efficient data handling.

- Variety: Data comes in various formats, including structured, semi-structured, and unstructured data.

- The Role of RAM in Big Data Processing

Speed and Performance

- Fast Data Access: RAM provides fast read and write access to data, which is essential for real-time analytics and quick data processing.

- Low Latency: RAM access latency is on the order of nanoseconds, while SSDs typically respond in microseconds and HDDs in milliseconds. Keeping working data in RAM therefore removes most of the storage wait from each access.
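To make the latency gap concrete, here is a rough back-of-the-envelope sketch. The latency figures are order-of-magnitude assumptions chosen for illustration, not measurements of any particular device, and the names `ASSUMED_LATENCY_NS` and `total_seconds` are hypothetical.

```python
# Order-of-magnitude access latencies in nanoseconds.
# These figures are illustrative assumptions, not measurements.
ASSUMED_LATENCY_NS = {
    "RAM": 100,          # roughly 100 nanoseconds
    "SSD": 100_000,      # roughly 100 microseconds
    "HDD": 10_000_000,   # roughly 10 milliseconds (seek plus rotation)
}


def total_seconds(accesses, latency_ns):
    """Time spent waiting if `accesses` random reads are issued one after another."""
    return accesses * latency_ns / 1e9


if __name__ == "__main__":
    # Compare one million dependent random reads on each medium.
    for medium, latency_ns in ASSUMED_LATENCY_NS.items():
        print(f"{medium}: {total_seconds(1_000_000, latency_ns):,.1f} s")
```

Real systems overlap and batch requests, so actual gaps are usually smaller than this serial model suggests, but the ordering between the three media holds.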

In-Memory Computing

- Definition: In-memory computing involves storing data in RAM rather than on traditional disk-based storage.

- Advantages: This approach significantly speeds up data processing and analytics by reducing the time needed to read and write data from slower storage media.
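The difference between disk-based and in-memory processing can be sketched with a small experiment. The example below is only an illustration under stated assumptions: the two-column CSV layout, the row count, and all function names are hypothetical, and on a small file the operating system's page cache may already serve reads from RAM, so the measured gap largely reflects repeated parsing.

```python
import csv
import os
import tempfile
import time


def write_sample(path, rows=50_000):
    """Write a hypothetical two-column CSV (id, amount) to disk."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for i in range(rows):
            writer.writerow([i, i % 100])


def total_from_disk(path):
    """Disk-based approach: re-read and re-parse the file on every query."""
    with open(path, newline="") as f:
        return sum(int(amount) for _, amount in csv.reader(f))


def load_into_memory(path):
    """In-memory approach: parse the file once and keep the values in RAM."""
    with open(path, newline="") as f:
        return [int(amount) for _, amount in csv.reader(f)]


def run_demo(queries=5):
    """Run the same query both ways and return results plus elapsed seconds."""
    fd, path = tempfile.mkstemp(suffix=".csv")
    os.close(fd)
    try:
        write_sample(path)

        start = time.perf_counter()
        disk_results = [total_from_disk(path) for _ in range(queries)]
        disk_seconds = time.perf_counter() - start

        amounts = load_into_memory(path)
        start = time.perf_counter()
        memory_results = [sum(amounts) for _ in range(queries)]
        memory_seconds = time.perf_counter() - start
    finally:
        os.remove(path)
    return disk_results, memory_results, disk_seconds, memory_seconds


if __name__ == "__main__":
    disk, memory, disk_s, memory_s = run_demo()
    print(f"same answers: {disk == memory}")
    print(f"from disk: {disk_s:.4f} s, from memory: {memory_s:.4f} s")
```

Both approaches return the same totals; the in-memory version simply avoids paying the read and parse cost on every query.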

- RAM Requirements for Big Data Applications

System Memory Capacity

- High-Capacity RAM: Big data applications require high-capacity RAM to store and process large data sets efficiently. Systems often need tens or hundreds of gigabytes of RAM.

- Scalability: As data volumes grow, the ability to scale RAM capacity becomes critical to maintaining performance.
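A rough sizing estimate helps turn "tens or hundreds of gigabytes" into a number for a specific data set. The sketch below assumes fixed-width values and a hypothetical `overhead_factor` to cover indexes, object headers, and working copies; both defaults are assumptions to be replaced with measured values.

```python
def estimate_dataset_gib(rows, columns, bytes_per_value=8, overhead_factor=1.5):
    """Estimate the RAM needed to hold a table fully in memory, in GiB.

    bytes_per_value: assumed fixed width per value (8 bytes for a 64-bit number).
    overhead_factor: assumed multiplier for runtime overhead (hypothetical default).
    """
    raw_bytes = rows * columns * bytes_per_value
    return raw_bytes * overhead_factor / 2**30


if __name__ == "__main__":
    # One billion rows of twenty 64-bit columns.
    print(f"{estimate_dataset_gib(1_000_000_000, 20):.1f} GiB")
```

Under these assumptions, one billion rows of twenty numeric columns need about 149 GiB for the raw values alone and roughly 224 GiB once the assumed overhead is applied.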

RAM and Parallel Processing

- Multi-Core Processing: Modern big data analytics platforms use multi-core processors to handle parallel processing tasks. Sufficient RAM capacity and bandwidth are needed so that cores are not left idle waiting for data.

- Distributed Computing: In distributed computing environments, such as Hadoop and Spark, RAM is crucial for each node in the cluster to process its portion of the data set effectively.
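For a distributed cluster, a simple check is whether each node's share of the data fits in the memory that node can actually devote to it. The following sketch assumes the data is split evenly across nodes and that only a fraction of each node's RAM is usable for data; the `usable_fraction` default is a hypothetical placeholder, not a setting taken from Hadoop or Spark.

```python
import math


def partition_fits(dataset_gib, nodes, ram_per_node_gib, usable_fraction=0.6):
    """Return (data per node, usable RAM per node, whether the share fits).

    Assumes an even split; usable_fraction is a hypothetical allowance for
    the operating system, runtime, and other processes on each node.
    """
    per_node_gib = dataset_gib / nodes
    usable_gib = ram_per_node_gib * usable_fraction
    return per_node_gib, usable_gib, per_node_gib <= usable_gib


def min_nodes(dataset_gib, ram_per_node_gib, usable_fraction=0.6):
    """Smallest node count whose combined usable RAM holds the whole data set."""
    usable_gib = ram_per_node_gib * usable_fraction
    return math.ceil(dataset_gib / usable_gib)


if __name__ == "__main__":
    print(partition_fits(1000, 10, 128))  # each node would hold 100 GiB
    print(min_nodes(1000, 128))
```

With these assumed figures, ten 128 GiB nodes cannot hold a 1000 GiB data set in memory, while fourteen can. Skewed partitions would need more headroom than this even-split model shows.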

- Optimizing RAM Usage in Big Data Systems

Memory Management Techniques

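- Garbage Collection: In managed runtimes such as the JVM, on which Hadoop and Spark run, efficient garbage collection frees RAM that is no longer needed. Poorly tuned collection can cause long pauses, so heap sizing and collector settings deserve attention.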

- Memory Pools: Using memory pools can help allocate and deallocate memory more efficiently, reducing the overhead of frequent memory operations.
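The memory pool idea can be sketched in a few lines. This is a minimal illustration, not a production allocator: the `BufferPool` class and its methods are hypothetical names, and the pool is not thread-safe.

```python
class BufferPool:
    """Hands out reusable fixed-size buffers instead of allocating new ones."""

    def __init__(self, buffer_size, count):
        self._buffer_size = buffer_size
        # Allocate all buffers up front so later requests are cheap.
        self._free = [bytearray(buffer_size) for _ in range(count)]
        self.allocations = count  # total buffers ever created

    def acquire(self):
        """Return a free buffer, allocating a new one only if the pool is empty."""
        if self._free:
            return self._free.pop()
        self.allocations += 1
        return bytearray(self._buffer_size)

    def release(self, buf):
        """Return a buffer to the pool. Contents are not cleared."""
        self._free.append(buf)


if __name__ == "__main__":
    pool = BufferPool(buffer_size=1024, count=2)
    first = pool.acquire()
    pool.release(first)
    second = pool.acquire()
    print(first is second)        # the same buffer object is reused
    print(pool.allocations)       # no extra allocation was needed
```

Because released buffers are reused as-is, callers should overwrite the contents before reading from them.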

Data Caching

- Importance of Caching: Caching frequently accessed data in RAM can significantly improve performance by reducing the need to repeatedly read data from slower storage.

- Implementation: Eviction policies such as LRU (Least Recently Used) or LFU (Least Frequently Used) decide which entries to discard when the cache is full, so that limited RAM is kept for the data most likely to be reused.
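As an illustration of the LRU policy mentioned above, here is a minimal sketch built on Python's `collections.OrderedDict`. The `LRUCache` class is a hypothetical example written for this article, not part of any big data platform.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` items, evicting the least recently used one."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        """Return the cached value and mark the key as recently used."""
        if key not in self._items:
            return default
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key, value):
        """Insert or update a value, evicting the oldest entry if over capacity."""
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")           # "a" is now the most recently used
    cache.put("c", 3)        # evicts "b"
    print(cache.get("b"))    # None
    print(cache.get("a"))    # 1
```

For caching function results, Python's standard library also provides `functools.lru_cache`, which applies the same policy as a decorator.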

- Hardware Considerations for Big Data RAM

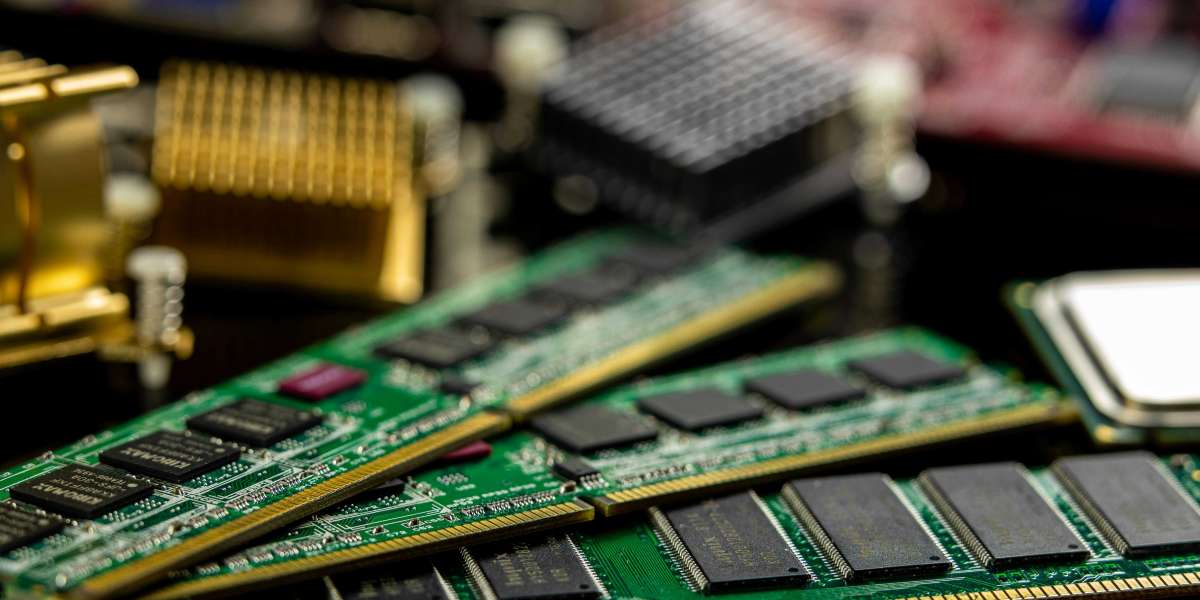

Choosing the Right RAM

- DDR4 and DDR5: Modern systems use DDR4 or DDR5 RAM, which offer higher speeds and better performance than older DDR3 modules.

- ECC RAM: Error-Correcting Code (ECC) RAM detects and corrects single-bit memory errors. It is recommended for big data applications, where long-running jobs over large data sets make silent data corruption more costly.

Upgrading RAM

- Capacity Planning: Organizations should plan for future growth and upgrade RAM capacity as needed to handle increasing data volumes.

- Compatibility: Ensure that the RAM modules are compatible with the system's motherboard and other hardware components.
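Capacity planning can start from a simple compound-growth projection. The sketch below assumes the in-memory working set grows at a constant annual rate, which is a simplification; the function name and the example figures are hypothetical.

```python
def first_year_over_capacity(working_set_gib, installed_gib, annual_growth, max_years=20):
    """Return the first year in which the projected working set exceeds installed RAM.

    Returns 0 if it is already too large, or None if it still fits after max_years.
    annual_growth is a fraction, for example 0.4 for 40 percent per year.
    """
    if working_set_gib > installed_gib:
        return 0
    size = working_set_gib
    for year in range(1, max_years + 1):
        size *= 1 + annual_growth
        if size > installed_gib:
            return year
    return None


if __name__ == "__main__":
    # A 100 GiB working set on a 256 GiB server, growing 40 percent per year.
    print(first_year_over_capacity(100, 256, 0.4))
```

In this example the working set reaches 140 GiB, then 196 GiB, then about 274 GiB, so the upgrade is needed before the end of year three.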

- Real-World Applications and Case Studies

Financial Services

- Risk Analysis: Financial institutions use big data analytics to assess risks and make informed investment decisions. High-capacity RAM enables faster processing of complex financial models.

- Fraud Detection: Real-time fraud detection systems rely on fast data processing, which is facilitated by ample RAM.

Healthcare

- Patient Data Analysis: Healthcare providers analyze large volumes of patient data to improve diagnostics and treatment plans. RAM plays a critical role in managing and processing this data efficiently.

- Genomic Research: Genomic data analysis requires significant computational power and memory capacity, making RAM a crucial component in research systems.

E-Commerce

- Customer Behavior Analysis: E-commerce platforms analyze customer behavior and purchase patterns to personalize recommendations. Sufficient RAM speeds up these analyses and lets them run over larger, more recent data sets, which helps keep recommendations relevant.

- Inventory Management: Real-time inventory management systems depend on quick data processing to keep track of stock levels and optimize supply chains.

The Bottom Line

RAM is a critical component in the performance of big data analytics and processing systems. Its speed, low latency, and capacity for in-memory computing make it indispensable for handling the vast volumes, velocities, and varieties of data characteristic of big data. By optimizing RAM usage and ensuring sufficient capacity, organizations can significantly enhance their big data capabilities. For a comprehensive selection of high-quality RAM modules and expert advice on optimizing your big data infrastructure, visit our RAM Category at Computer Parts HQ.